Series Features

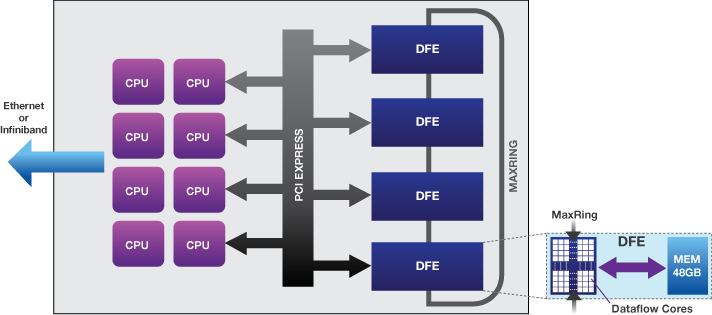

- Integrated multi-core CPUs and DFEs

- 1U form factor for maximum rack density

- IPMI/Lights-out management support

- 2x Gigabit Ethernet data connections

- Optional 10GigE or InfiniBand connection

- Fully programmable with MaxCompiler

MPC-C500

- Balanced DFEs, CPUs and I/O

- 4x Vectis dataflow engines

- Up to 192GB DFE RAM (48GB per DFE)

- 12 Intel Xeon CPU cores

- Up to 192GB CPU memory

- DFEs connect to CPUs via PCI Express gen2 x8

- MaxRing interconnect between DFEs

- 3x 3.5” disk drives or 5x 2.5” disk drives

MPC-C series nodes provide the closest coupling of DFEs and CPUs for computations requiring the most data transfer.

A 1U MPC-C series machine is a server-class HPC system with 12 Intel Xeon CPU cores, up to 192GB of RAM for the CPUs, and multiple dataflow compute engines closely coupled to the CPUs. Each node typically provides the compute performance of 20-50 standard x86 servers while consuming around 500W. This dramatically lowers the cost of computing and creates exciting new opportunities to deploy next-generation algorithms and applications much earlier than would be possible with standard servers.

Each dataflow engine is connected to the CPUs via PCI Express, and DFEs within the same node are directly connected by the MaxRing interconnect. The node also supports an optional InfiniBand or 10GigE interconnect for tight, cluster-level integration.
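As a rough guide to what the PCI Express gen2 x8 link listed in the feature summary implies, the sketch below works out the theoretical per-direction bandwidth of one DFE-to-CPU link. The 5 GT/s lane rate and 8b/10b encoding are taken from the PCI Express 2.0 specification, not from this datasheet, and the function name is illustrative; achieved throughput will be lower once protocol overhead is included.

```python
# Theoretical per-direction bandwidth of a PCI Express gen2 link.
# Assumptions (from the PCIe 2.0 specification, not this datasheet):
#   - 5 GT/s raw signalling rate per lane
#   - 8b/10b line encoding, so 8 payload bits per 10 transferred bits

def pcie_gen2_bandwidth_gb_per_s(lanes: int) -> float:
    """Return the theoretical one-way bandwidth in GB/s (1 GB = 1e9 bytes)."""
    raw_transfers_per_s = 5_000_000_000               # 5 GT/s per lane
    payload_bits_per_s = raw_transfers_per_s * 8 // 10  # after 8b/10b encoding
    total_bits_per_s = payload_bits_per_s * lanes
    return total_bits_per_s / 8 / 1e9                 # bits -> bytes -> GB


# One DFE attached over an x8 link
print(f"x8 link: {pcie_gen2_bandwidth_gb_per_s(8):.1f} GB/s per direction")
```

This is an upper bound for a single link only; it says nothing about MaxRing bandwidth, which the text above does not quantify.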

MPC-C series node architecture

MPC-C series nodes run production-standard Linux distributions, including Red Hat Enterprise Linux 5 and 6. The dataflow engine management software installs as a standard RPM package that includes all the software needed to run accelerated applications: a kernel device driver, a status monitoring daemon, and runtime libraries. All the management tools use standard methods for status and event logging. The nodes support the Intelligent Platform Management Interface (IPMI) for remote management. The status monitoring daemon logs all accelerator health information. User tools provide visibility into accelerator state, and system check utilities can be easily integrated into cluster-level automatic monitoring systems.
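Because the nodes support IPMI, a cluster monitoring script could poll them with any standard IPMI client. The sketch below is one possible approach, assuming the open-source `ipmitool` utility is installed and the node's management controller is reachable over the network. The hostname and user name are placeholders, and the helper functions are hypothetical, not part of the dataflow engine management software.

```python
import subprocess

# Minimal sketch: build and run an ipmitool query against a node's
# management controller. Assumes ipmitool is installed; the password is
# read by ipmitool from the IPMI_PASSWORD environment variable (-E flag)
# so it does not appear on the command line.

def build_ipmi_command(host: str, user: str, *subcommand: str) -> list[str]:
    """Return the ipmitool argument list for a remote (IPMI v2.0 LAN) query."""
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E", *subcommand]


def query_node(host: str, user: str, *subcommand: str) -> str:
    """Run the query and return ipmitool's standard output as text."""
    result = subprocess.run(
        build_ipmi_command(host, user, *subcommand),
        capture_output=True, text=True, check=True,
    )
    return result.stdout


# Example command for a placeholder node name; nothing is executed here.
print(" ".join(build_ipmi_command("mpc-node-01.example.com", "admin", "chassis", "status")))
```

Calling `query_node(...)` with `"sel", "list"` instead of `"chassis", "status"` would retrieve the controller's system event log, which is one way such checks could feed a cluster-level monitoring system.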