At Maxeler, we lead in the innovation, development and delivery of High Performance Computing solutions. For monolithic applications, our hardware, software and services deliver at least an order of magnitude advantage in performance per unit of rack space and in computations per Watt (green computing), along with top price-performance when total cost of ownership is taken into account.

Our solutions exploit dataflow computing, a revolutionary way of performing computation that is completely different from computing with conventional CPUs. Dataflow computers focus on optimizing the movement of data in an application and use massive parallelism across thousands of tiny ‘dataflow cores’ to provide order-of-magnitude benefits in performance, space and power consumption. An analogy for the move from control flow to dataflow is Ford's assembly-line model of car manufacturing, in which expensive, highly skilled craftsmen (control flow CPU cores) are replaced by a factory line that moves cars through a sea of single-skill workers (dataflow cores).

Maxeler provides compute solutions, including high-performance compute nodes, compilers and management software, that enable production deployment of dataflow computing. Our technology is in use at FORTUNE 500 companies and universities across the world (see our publications for more information).

Computing with a control flow core

In a software application, the program source is compiled into a list of instructions for a particular processor, which is then loaded into the memory attached to that processor. Data and instructions are read from memory into the processor core, where operations are performed, and the results are written back to memory. Modern processors contain many levels of caching, forwarding and prediction logic to improve the efficiency of this paradigm; however, the model is inherently sequential, with performance limited by the latency of moving data around this loop.

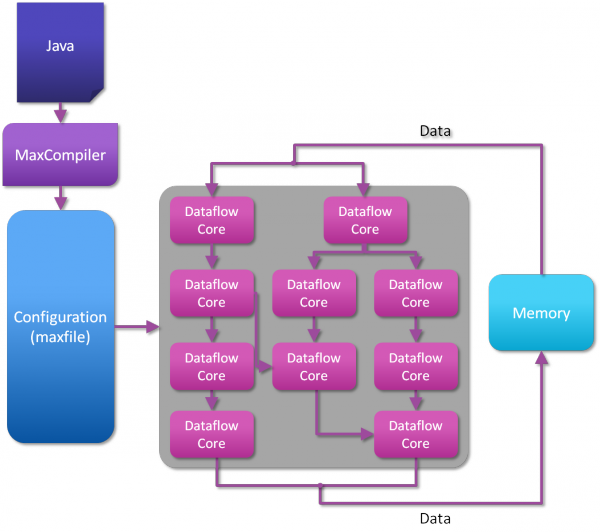
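To make this loop concrete, here is a minimal Python sketch of a toy load/store processor computing y = (a + b) * c. It is an illustration under simplifying assumptions, not a model of any real CPU: the function name `run_control_flow`, the `(op, dst, src1, src2)` instruction format and the access counter are hypothetical, and caches, forwarding and prediction are deliberately ignored. What it shows is that every instruction is fetched from memory, and the intermediate result `t` makes a round trip through memory before the next instruction can use it.

```python
def run_control_flow(program, memory):
    """Execute (op, dst, src1, src2) instructions one at a time.

    Hypothetical toy model (no caches): each step fetches the instruction,
    loads both operands from memory and stores the result back.
    Returns the total number of memory accesses performed.
    """
    accesses = 0
    for op, dst, src1, src2 in program:     # strictly sequential execution
        accesses += 1                       # fetch the instruction from memory
        x, y = memory[src1], memory[src2]   # load both operands into the core
        accesses += 2
        if op == "add":
            result = x + y
        elif op == "mul":
            result = x * y
        else:
            raise ValueError(f"unknown op: {op}")
        memory[dst] = result                # write the result back to memory
        accesses += 1
    return accesses

# y = (a + b) * c: the intermediate t is stored, then loaded again.
memory = {"a": 2, "b": 3, "c": 4}
program = [
    ("add", "t", "a", "b"),
    ("mul", "y", "t", "c"),
]
accesses = run_control_flow(program, memory)
print(memory["y"], accesses)  # 20 8
```

Even in this two-instruction program, half of the operand loads exist only to bring back a value the core had just produced.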

Computing with dataflow cores

In a dataflow application, the program source is transformed into a Dataflow engine configuration file, which describes the operations, layout and connections of a Dataflow engine. Data is streamed from memory into the chip, where operations are performed, and results are forwarded directly from one computational unit (“dataflow core”) to another as they are needed, without being written to off-chip memory until the chain of processing is complete.
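For contrast, the same computation, applied element by element to streams of inputs, can be sketched as a pipeline of Python generators. This is only an analogy, not Maxeler's programming model or toolchain: the names `add_core`, `mul_core` and `run_dataflow` are hypothetical, and Python interleaves the stages sequentially, whereas hardware dataflow cores operate concurrently on different elements of the stream. What the sketch does capture is the data movement: each sum flows straight from the adder into the multiplier and is never stored in an intermediate array; only the final results are collected.

```python
def add_core(xs, ys):
    """Toy 'dataflow core': emits x + y for each pair of values arriving on its inputs."""
    for x, y in zip(xs, ys):
        yield x + y

def mul_core(xs, ys):
    """Toy 'dataflow core': emits x * y for each pair of values arriving on its inputs."""
    for x, y in zip(xs, ys):
        yield x * y

def run_dataflow(a, b, c):
    """Compute y[i] = (a[i] + b[i]) * c[i] by wiring an adder into a multiplier."""
    sums = add_core(a, b)          # the adder's output stream is connected ...
    products = mul_core(sums, c)   # ... directly to the multiplier's input
    return list(products)          # only final results are written back

print(run_dataflow([2, 1], [3, 1], [4, 5]))  # [20, 10]
```

Because generators are lazy, no intermediate list of sums is ever built; each value is consumed by the next stage as soon as it is produced.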