Series Features

- Infiniband-connected dataflow engines

- 1U form factor for maximum rack density

- Dual QDR/FDR Infiniband connectivity

- Redundant power supplies

- IPMI/Lights-out management support

- Fully programmable with MaxCompiler

MPC-X2000

- Dense, flexible dataflow compute

- 8x Maia dataflow engines

- Up to 768GB of DFE RAM (96GB per DFE)

- DFEs connected to CPUs via Infiniband

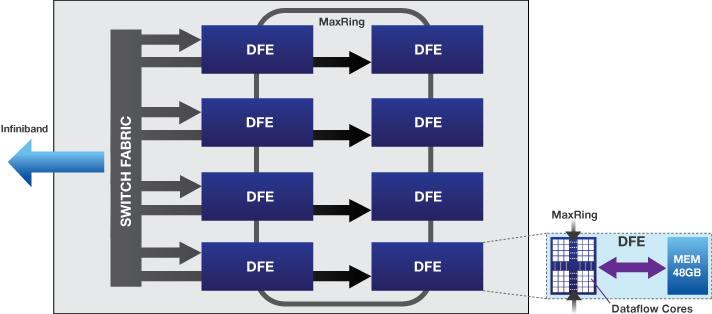

- MaxRing interconnect between DFEs

MPC-X series dataflow nodes provide multiple dataflow engines as shared resources on the network, allowing them to be used by applications running anywhere in a cluster.

“Dataflow as a shared resource” brings the efficiency benefits of virtualization to dataflow computing, maximizing utilization in multi-user, multi-application environments such as private or public cloud services and HPC clusters. CPU nodes can use as many DFEs as a particular application requires and release them for use by other nodes when no computation is running, so that cluster resources stay balanced at runtime.
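The acquire-and-release pattern described above can be illustrated with a small toy model. This is only a sketch of the general idea of a shared pool: the `DfePool` class and its `acquire`, `release`, and `lease` methods are hypothetical names chosen for illustration and are not part of Maxeler's software, which handles this allocation automatically.

```python
import threading
from contextlib import contextmanager


class DfePool:
    """Toy model of a pool of dataflow engines shared by several clients.

    Hypothetical illustration only; not Maxeler's API.
    """

    def __init__(self, total_dfes=8):
        # Engines are identified by index; all start out free.
        self._free = list(range(total_dfes))
        self._lock = threading.Lock()

    def acquire(self, count):
        """Reserve `count` engines, or raise if too few are free."""
        with self._lock:
            if count > len(self._free):
                raise RuntimeError("not enough free DFEs")
            taken, self._free = self._free[:count], self._free[count:]
            return taken

    def release(self, dfes):
        """Return engines to the pool so other clients can use them."""
        with self._lock:
            self._free.extend(dfes)

    def free_count(self):
        with self._lock:
            return len(self._free)

    @contextmanager
    def lease(self, count):
        """Hold `count` engines for the duration of a `with` block."""
        dfes = self.acquire(count)
        try:
            yield dfes
        finally:
            self.release(dfes)


pool = DfePool(total_dfes=8)
with pool.lease(3) as engines:
    # While the computation runs, only the remaining engines are available.
    print(len(engines), pool.free_count())
# After the block, all engines are free again for other clients.
print(pool.free_count())
```

Releasing engines in a `finally` clause means they return to the pool even if the client's computation fails, which is what keeps a shared resource available to other users.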

Individual MPC-X nodes provide large memory capacities (up to 768GB) and compute performance equivalent to dozens of conventional x86 servers.

The MPC-X series enables remote access to DFEs through dual FDR/QDR Infiniband connectivity combined with RDMA technology that transfers data directly from CPU node memory to remote dataflow engines, avoiding inefficient memory copies. Client machines can run standard Linux with Maxeler software installed.

MPC-X series node architecture

Maxeler’s software automatically manages the resources within the MPC-X nodes: it dynamically allocates dataflow engines to CPU threads and processes and balances demands on the cluster at runtime to maximize overall performance. With a simple Linux RPM installation, any CPU server in the cluster can be quickly upgraded to begin benefiting from dataflow computing.

The MPC-X2000 provides eight MAX4 (Maia) dataflow engines with power consumption comparable to a single high-end server. Each dataflow engine is accessible to any CPU client machine via the Infiniband network, while multiple engines within the same MPC-X node can also communicate directly using the dedicated high-speed MaxRing interconnect.